The growing impact of energy on operational cost and system robustness has become a strong motivation for improving energy efficiency, alongside performance, in parallel computing systems. Multi-core architectures provide features that enable energy-efficient computing, such as core asymmetry and dynamic voltage and frequency scaling (DVFS) in the CPU and memory subsystems. At the same time, task-based parallel programming models have gained popularity as an effective way to develop parallel applications. These models let developers express parallelism as tasks and their dependencies, which gives the runtime flexibility in how the tasks are executed.

Challenges in Energy-Efficient Task Scheduling

An efficient runtime scheduler plays a crucial role in achieving energy-efficient execution of a task-based application. It must leverage both architectural features and application characteristics, which involves:

- Assigning tasks to the most suitable core type (in core-asymmetric architectures).

- Dynamically adjusting CPU and memory frequency based on workload characteristics.

- Adapting to different energy-performance trade-offs based on user or system requirements.

Moreover, not all architectural knobs may be available or exposed to the user on a given platform. This makes it essential to design adaptive scheduling strategies that operate efficiently across different hardware configurations. Our work therefore focuses on adaptive task scheduling and resource management to improve energy efficiency in multi-core architectures, and has led to two key publications [1][2].

Our Approach: An Adaptive and Model-Driven Energy-Aware Scheduler

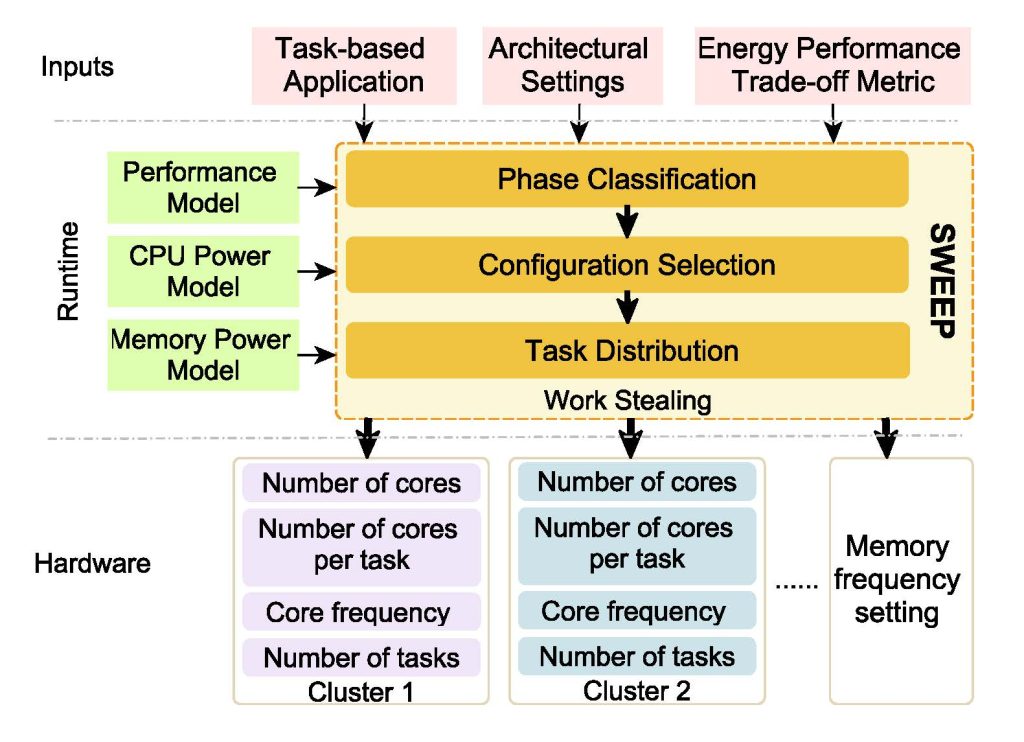

In the eProcessor project, we have developed a task scheduler that improves overall energy efficiency by leveraging two groups of factors:

- Architectural factors: Core asymmetry, CPU DVFS, and memory DVFS

- Application characteristics: Inter-task parallelism, intra-task parallelism, and individual task properties

We introduce a set of models, built with multivariate polynomial regression, that capture the impact of tuning all these knobs on both performance and power consumption. The scheduler uses these models to predict energy consumption and to select the best task schedule and DVFS settings. Additionally, the scheduler is designed to adapt flexibly to different energy-performance trade-off metrics, making it suitable for a variety of use cases. The evaluation shows that, compared to a baseline greedy random work-stealing scheduler, our scheduler reduces the energy-delay product (EDP), ED2P, and E2DP by 28%, 41%, and 55%, respectively.
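The selection step can be illustrated with a minimal sketch. It is not the scheduler's actual implementation: the `Candidate` struct, its field names, and the functions `tradeoff_metric` and `select_best` are hypothetical, and the predicted time and power values are assumed to come from performance and power models such as the regression models described above. The sketch also assumes the usual reading of the metrics as E^a * D^b, with EDP = E * D, ED2P = E * D^2, and E2DP = E^2 * D.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical candidate configuration with model-predicted time and power. */
typedef struct {
    int big_core;         /* 1 = high-performance cores, 0 = low-power cores */
    int num_cores;        /* number of cores assigned to the task */
    double cpu_ghz;       /* CPU frequency setting */
    double mem_ghz;       /* memory frequency setting */
    double pred_time_s;   /* predicted execution time (delay) in seconds */
    double pred_power_w;  /* predicted average power in watts */
} Candidate;

/* Small non-negative integer power, to avoid depending on the math library. */
static double ipow(double base, int exp)
{
    double result = 1.0;
    for (int i = 0; i < exp; ++i) {
        result *= base;
    }
    return result;
}

/* Metric E^a * D^b: (1,0) = energy, (1,1) = EDP, (1,2) = ED2P, (2,1) = E2DP. */
double tradeoff_metric(const Candidate *c, int energy_exp, int delay_exp)
{
    double energy_j = c->pred_power_w * c->pred_time_s;
    return ipow(energy_j, energy_exp) * ipow(c->pred_time_s, delay_exp);
}

/* Return the index of the candidate with the lowest predicted metric. */
size_t select_best(const Candidate *cands, size_t n,
                   int energy_exp, int delay_exp)
{
    assert(cands != NULL && n > 0);
    size_t best = 0;
    double best_val = tradeoff_metric(&cands[0], energy_exp, delay_exp);
    for (size_t i = 1; i < n; ++i) {
        double val = tradeoff_metric(&cands[i], energy_exp, delay_exp);
        if (val < best_val) {
            best_val = val;
            best = i;
        }
    }
    return best;
}
```

Calling `select_best(cands, n, 1, 0)` picks the configuration with the lowest predicted energy, while `select_best(cands, n, 1, 2)` weights delay more heavily. Changing only the two exponents is one simple way a scheduler could switch between trade-off metrics without changing the search itself.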

Integration with LLVM OpenMP Runtime

To extend the applicability of our scheduler, we integrated it into the LLVM OpenMP runtime, where it schedules OpenMP taskloop constructs on multi-core architectures. To streamline the conversion of OpenMP for loops into taskloop constructs, we developed an automated Python tool that allows users to specify moldable parallel regions for better scheduling. Furthermore, we introduced a cost clause for the taskloop construct to enable efficient scheduling of taskloops with varying input sizes. For every parallel region, our extended LLVM OpenMP runtime dynamically selects the core type, number of cores, CPU frequency, and memory frequency that best suit the chosen target metric, such as energy consumption or energy-delay product.
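To show the kind of conversion involved, the sketch below writes the same loop twice using only standard OpenMP: once as a worksharing for loop and once as a taskloop. The function names and the `grainsize(1024)` value are hypothetical choices for illustration, and this is not output of the Python tool. The cost clause from the extended runtime is deliberately left out, since its syntax is not given here.

```c
#include <assert.h>
#include <stddef.h>

/* Original form: a worksharing loop that scales a vector in place. */
void scale_parallel_for(double *y, size_t n, double a)
{
    assert(y != NULL || n == 0);
    #pragma omp parallel for
    for (size_t i = 0; i < n; ++i) {
        y[i] *= a;
    }
}

/* Converted form: one thread creates tasks that each cover a chunk of
 * iterations; the runtime decides where and when the tasks execute. */
void scale_taskloop(double *y, size_t n, double a)
{
    assert(y != NULL || n == 0);
    #pragma omp parallel
    #pragma omp single
    {
        /* grainsize(1024) is an arbitrary value chosen for illustration. */
        #pragma omp taskloop grainsize(1024)
        for (size_t i = 0; i < n; ++i) {
            y[i] *= a;
        }
    }
}
```

Both functions compute the same result. With OpenMP enabled (for example `-fopenmp` in GCC or Clang), the taskloop version hands the runtime a set of tasks rather than a fixed iteration-to-thread mapping, which is what gives a task scheduler room to choose resources per region.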

References:

[1] Jing Chen, Madhavan Manivannan, Bhavishya Goel, and Miquel Pericàs, JOSS: Joint Exploration of CPU-Memory DVFS and Task Scheduling for Energy Efficiency, 52nd International Conference on Parallel Processing (ICPP) 2023.

[2] Jing Chen, Madhavan Manivannan, Bhavishya Goel, and Miquel Pericàs, SWEEP: Adaptive Task Scheduling for Exploring Energy Performance Trade-offs, 38th IEEE International Parallel and Distributed Processing Symposium (IPDPS) 2024.