In today’s high-performance computer systems, and particularly in resource-intensive ones such as servers, I/O transactions that read data from a hard disk or a network card constitute a significant part of the overall workload, which makes efficient I/O essential to system performance. Most of today’s peripheral devices bypass the processor, minimizing the involvement of the Operating System (OS) kernel, and read from and write to system memory directly using Direct Memory Access (DMA) hardware units.

Directly accessing system memory brings performance benefits, but it also raises stability and protection issues whenever an external device accesses a region of memory it should not. For instance, a software driver bug may cause a peripheral device to write to an address that belongs to a running process; in the best case, the process terminates. A far more serious security issue arises when a malicious external device reads sensitive data and forwards it to the network, with potentially catastrophic results. Such issues led to the creation of mechanisms that apply the virtual memory concept to I/O devices.

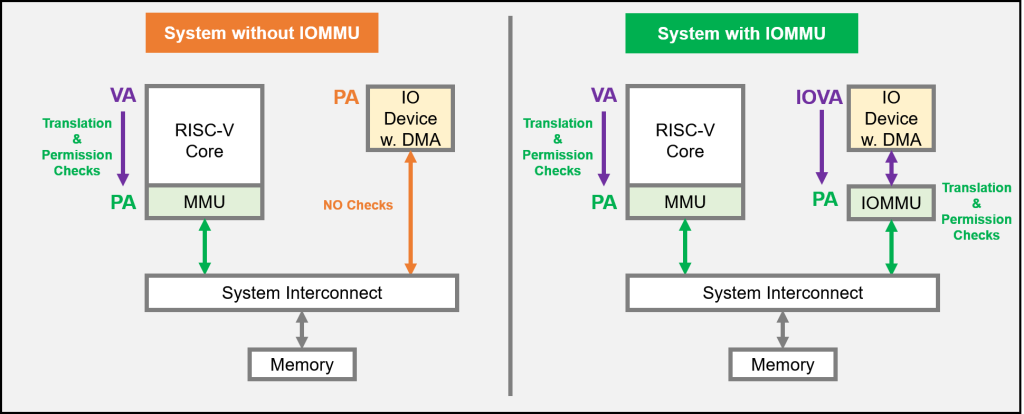

What is an IOMMU: Computer architects and designers already use virtual memory to resolve the equivalent CPU-side issues, and the same mechanism was later reused for I/O devices under the name I/O virtual memory. The I/O Memory Management Unit (IOMMU) is the hardware unit responsible for I/O virtual memory: it translates device-visible I/O Virtual Addresses (IOVA) to Physical Addresses (PA), analogous to the CPU MMU, which translates application-visible Virtual Addresses (VA) to PAs. The IOMMU performs address translation using standard radix page tables prepared by the OS (just as for CPU processes) and prevents unauthorized access to system memory through permission checks such as read (R), write (W), and execute (X). Figure 1 presents an example diagram of two systems: without an IOMMU (left) and with an IOMMU (right).

Figure 1: A diagram illustrating a system without IOMMU (left) vs a system with IOMMU (right).
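To make the translation and permission check described above concrete, the following minimal Python sketch models a 3-level radix walk with 9-bit indices and 4 KiB pages. It is an illustration under simplifying assumptions, not the RISC-V page-table format: tables are nested dictionaries, leaf entries hold a physical page number and a permission set, and `translate`, `map_page`, and `IOMMUFault` are hypothetical names.

```python
"""Minimal sketch of an IOMMU-style radix page-table walk with permission checks.

Simplifying assumptions (NOT the RISC-V PTE format): page tables are nested
Python dicts, each level is indexed by 9 bits of the page number, pages are
4 KiB, only last-level (4 KiB) leaves exist, and each leaf stores
(physical_page_number, permission_set).
"""

PAGE_SHIFT = 12   # 4 KiB pages
INDEX_BITS = 9    # 512 entries per table, as in RISC-V radix tables
LEVELS = 3        # a 3-level table, as in Sv39


class IOMMUFault(Exception):
    """Raised when a translation is missing or the access is not permitted."""


def translate(root: dict, iova: int, access: str) -> int:
    """Walk the radix table from the root and return the physical address."""
    vpn = iova >> PAGE_SHIFT
    offset = iova & ((1 << PAGE_SHIFT) - 1)
    table = root
    for level in range(LEVELS - 1, -1, -1):   # root level first
        index = (vpn >> (level * INDEX_BITS)) & ((1 << INDEX_BITS) - 1)
        if index not in table:
            raise IOMMUFault(f"no mapping for IOVA {iova:#x} at level {level}")
        table = table[index]
    ppn, perms = table                         # leaf entry after the last level
    if access not in perms:                    # R / W / X permission check
        raise IOMMUFault(f"{access!r} access denied for IOVA {iova:#x}")
    return (ppn << PAGE_SHIFT) | offset


def map_page(root: dict, iova: int, ppn: int, perms: set) -> None:
    """Hypothetical helper the 'OS' uses to install one 4 KiB mapping."""
    vpn = iova >> PAGE_SHIFT
    table = root
    for level in range(LEVELS - 1, 0, -1):    # create intermediate tables
        index = (vpn >> (level * INDEX_BITS)) & ((1 << INDEX_BITS) - 1)
        table = table.setdefault(index, {})
    table[vpn & ((1 << INDEX_BITS) - 1)] = (ppn, frozenset(perms))


if __name__ == "__main__":
    root = {}
    map_page(root, 0x4000_0000, ppn=0x8_1234, perms={"R", "W"})
    print(hex(translate(root, 0x4000_0123, "R")))   # 0x81234123
    try:
        translate(root, 0x4000_0123, "X")            # execute is not permitted
    except IOMMUFault as fault:
        print("fault:", fault)
```

A real IOMMU instead reads page-table entries from memory, encodes R/W/X as bits in each entry, and reports failures to the OS as faults rather than raising exceptions.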

Advantages of an IOMMU: Using an IOMMU instead of directly addressing physical memory offers several advantages: (a) large memory regions can be allocated without being contiguous in physical memory, since the IOMMU maps contiguous I/O virtual addresses onto the underlying fragmented physical addresses; (b) devices whose addresses are too short to cover the entire physical memory can still reach all of it through the IOMMU, avoiding the overhead of copying buffers to and from the peripheral’s addressable memory space; (c) memory is protected from malicious devices attempting to access regions they are not allowed to; (d) user-level access to I/O devices and kernel bypass become possible, offering significantly lower latency in high-performance applications; and (e) in virtualized systems running a hypervisor, guest operating systems can directly use hardware that supports I/O virtualization. High-performance hardware such as graphics cards uses DMA to access memory directly; in a virtual environment, all memory addresses are remapped by the virtual machine software, which would cause DMA devices to fail. The IOMMU can handle such remappings, allowing native OS device drivers to be used in a guest operating system.
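Advantages (a) and (b) can be illustrated with a small Python sketch, assuming a flat dictionary in place of real multi-level page tables, a hypothetical device limited to 32-bit addresses, and made-up physical frame numbers that all lie above 4 GiB. The device sees one contiguous 12 KiB buffer, while the IOMMU scatters it across three non-adjacent physical pages that the device could not otherwise address. The names `map_buffer` and `device_access` are hypothetical.

```python
"""Sketch: one contiguous IOVA range backed by scattered physical pages.

Hypothetical model: a flat dict (IOVA page number -> physical frame number)
stands in for the IOMMU page tables, and the frame numbers are made-up
examples above 4 GiB, out of reach of a device limited to 32-bit addresses.
"""

PAGE_SIZE = 4096
DEVICE_ADDR_BITS = 32  # hypothetical device that can only emit 32-bit addresses


def map_buffer(io_page_table: dict, iova_base: int, frames: list) -> None:
    """Map consecutive IOVA pages onto an arbitrary list of physical frames."""
    assert iova_base % PAGE_SIZE == 0, "IOVA base must be page aligned"
    for i, pfn in enumerate(frames):
        io_page_table[iova_base // PAGE_SIZE + i] = pfn


def device_access(io_page_table: dict, iova: int) -> int:
    """Model one DMA: check the device's address reach, then translate."""
    if iova >= 1 << DEVICE_ADDR_BITS:
        raise ValueError("device cannot emit this address")
    pfn = io_page_table[iova // PAGE_SIZE]   # KeyError models an IOMMU fault
    return pfn * PAGE_SIZE + iova % PAGE_SIZE


table = {}
# Three non-adjacent physical frames, all above the 4 GiB boundary.
scattered_frames = [0x12_3450, 0x10_0007, 0x20_0000]
map_buffer(table, iova_base=0x1000_0000, frames=scattered_frames)

# The device walks a 12 KiB buffer using contiguous, 32-bit IOVAs.
for iova in range(0x1000_0000, 0x1000_0000 + 3 * PAGE_SIZE, PAGE_SIZE):
    print(hex(iova), "->", hex(device_access(table, iova)))
```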

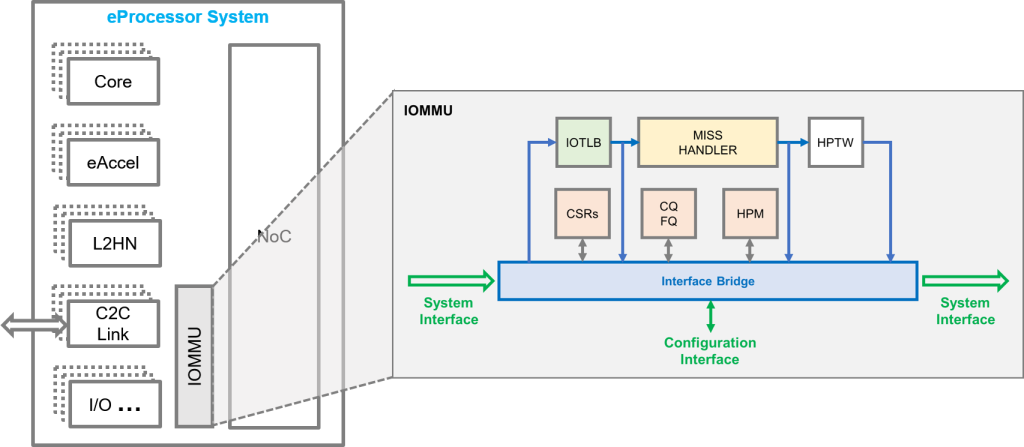

eProcessor IOMMU: eProcessor uses the open RISC-V ISA, but no official RISC-V IOMMU specification was available when the project started in 2021. FORTH’s Principal Investigator in eProcessor helped start the RISC-V IOMMU Task Group of the RISC-V Foundation and served as its Acting Chair. Within eProcessor, FORTH will design a RISC-V IOMMU IP that complies with the official specification and will be among the first RISC-V IOMMU implementations. The design will serve I/O devices connected to eProcessor systems via the off-chip Chip-to-Chip link, and potentially other SoC I/O devices. The IOMMU will support multiple concurrent I/O devices assigned to multiple contexts (processes), include IOTLBs and various caching structures with huge-page support, and perform hardware page table walks (HPTW) for the standard 39-bit (Sv39) and 48-bit (Sv48) virtual address schemes, i.e., 3-level and 4-level radix page tables, respectively. The design will target high-performance, low-latency, non-blocking operation with “hits-under-misses” and multiple concurrent hardware page table walks. It will include the official Control and Status Registers (CSRs), the Command and Fault queues (CQ, FQ), and a hardware performance monitor (HPM). The system interface will be compliant with the AMBA 5 Coherent Hub Interface (CHI), and the configuration interface with AXI. FORTH will also develop the necessary Linux kernel support and IOMMU driver.

Figure 2: The RISC-V IOMMU in eProcessor.
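The Sv39 and Sv48 schemes mentioned above differ only in the number of 9-bit virtual page number (VPN) fields above the 12-bit page offset: three for Sv39 and four for Sv48. The following Python sketch, a simplified illustration rather than part of the eProcessor design, splits an address into those fields and computes the page size mapped by a leaf at each level: 4 KiB at the last level, 2 MiB and 1 GiB for huge pages one and two levels up, and 512 GiB at the top Sv48 level. The functions `split_va` and `leaf_page_size` are hypothetical, and checks on the untranslated upper address bits are omitted.

```python
"""Sketch: how Sv39 and Sv48 split a virtual address for a radix walk.

Layout: a 12-bit page offset plus 9-bit VPN fields (3 for Sv39, 4 for Sv48).
A leaf found at a higher level maps a huge page spanning all lower index bits.
Checks on the untranslated upper address bits are omitted in this sketch.
"""

PAGE_OFFSET_BITS = 12
VPN_BITS = 9
SCHEMES = {"Sv39": 3, "Sv48": 4}  # scheme -> number of page-table levels


def split_va(va: int, scheme: str) -> tuple:
    """Return (vpn_fields, offset); vpn_fields[0] indexes the root table."""
    levels = SCHEMES[scheme]
    va_bits = PAGE_OFFSET_BITS + VPN_BITS * levels
    va &= (1 << va_bits) - 1                     # keep only the translated bits
    offset = va & ((1 << PAGE_OFFSET_BITS) - 1)
    fields = [
        (va >> (PAGE_OFFSET_BITS + VPN_BITS * level)) & ((1 << VPN_BITS) - 1)
        for level in range(levels - 1, -1, -1)   # root level first
    ]
    return fields, offset


def leaf_page_size(level: int) -> int:
    """Bytes mapped by a leaf at `level` (0 = last level, i.e. 4 KiB pages)."""
    return 1 << (PAGE_OFFSET_BITS + VPN_BITS * level)


if __name__ == "__main__":
    fields, offset = split_va(0x7F_1234_5678, "Sv39")
    print([hex(f) for f in fields], hex(offset))  # ['0x1fc', '0x91', '0x145'] 0x678
    for level in range(SCHEMES["Sv48"]):
        print(f"leaf at level {level}: {leaf_page_size(level):,} bytes")
```

Because a single huge-page translation covers many 4 KiB pages (512 of them for a 2 MiB page), an IOTLB with huge-page support can cover a large buffer with far fewer entries.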